Google developers are teaching an artificial intelligence to explain jokes. Far from being trivial, this could drive a deep technological advance in the way these systems learn to automatically analyze and respond to human language.

The goal is to push the boundaries of natural language processing (NLP), the technology behind large language models (LLMs) such as GPT-3, which allows chatbots to reproduce human communication with increasing accuracy. In the most advanced cases, it is difficult to tell whether the other party in a conversation is a human being or a machine.

Now, in a recently published paper, Google’s research team claims to have trained PaLM, a language model that can not only generate realistic text, but also interpret and explain jokes told by humans.

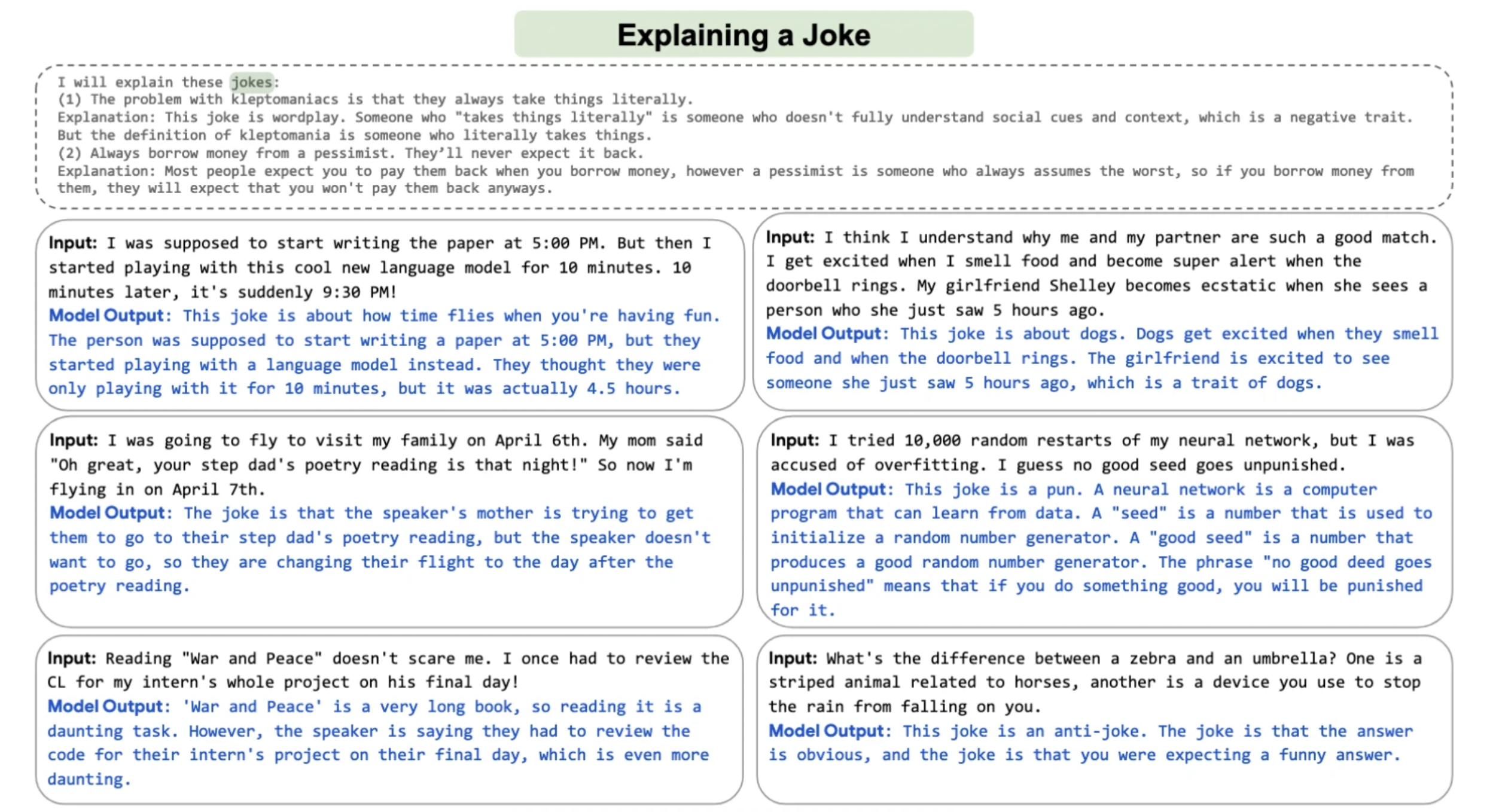

In the examples accompanying the paper, Google’s artificial intelligence team demonstrates the model’s ability to perform logical reasoning and other complex language tasks that depend heavily on context. One example is a technique called chain-of-thought prompting, which greatly improves the system’s ability to analyze multi-step logical problems by simulating a human’s thought process.
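The idea behind chain-of-thought prompting can be sketched in a few lines of Python. This is only a minimal illustration, assuming the technique amounts to showing the model a worked example whose answer spells out its intermediate steps before asking the real question. The function name `build_chain_of_thought_prompt` and the sample questions are hypothetical and do not come from Google's paper.

```python
# Hypothetical worked example shown to the model before the real question.
# The wording is illustrative and not taken from Google's paper.
EXEMPLAR_QUESTION = (
    "A shop has 3 boxes with 4 apples each. It sells 5 apples. How many are left?"
)
EXEMPLAR_REASONING = "3 boxes times 4 apples is 12 apples. 12 minus 5 is 7."
EXEMPLAR_ANSWER = "7"


def build_chain_of_thought_prompt(question: str) -> str:
    """Prepend a worked example whose answer spells out its intermediate steps."""
    exemplar = (
        f"Q: {EXEMPLAR_QUESTION}\n"
        f"A: {EXEMPLAR_REASONING} The answer is {EXEMPLAR_ANSWER}.\n"
    )
    # The trailing "A:" invites the model to continue with its own reasoning.
    return f"{exemplar}\nQ: {question}\nA:"


prompt = build_chain_of_thought_prompt(
    "A train has 6 cars with 20 seats each. 15 seats are empty. How many are taken?"
)
print(prompt)
```

The resulting text would then be sent to a language model. No model call is shown here, because the article does not describe how PaLM is accessed.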

By “explaining the jokes”, the system shows that it gets the joke: it can find the plot twist, the satire or the teasing turn in the punchline, as can be seen in this example.

Joke: What is the difference between a zebra and an umbrella? One is a striped animal related to horses, and the other is a device you use to stop the rain from falling on you.

AI explanation: This joke is an anti-joke. The joke is that the answer is obvious, and the joke is that you were expecting a funny answer.

Behind PaLM’s ability to parse these prompts is one of the largest language models built so far, with 540 billion parameters. Parameters are the elements of a model that are adjusted during the learning process each time the system is fed sample data. By comparison, GPT-3, an earlier large language model, has 175 billion parameters.
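To give a sense of what counting parameters means, here is a rough sketch that tallies the trainable values of a tiny fully connected network and compares the two figures quoted above. It assumes plain dense layers with one weight per connection and one bias per output, which is far simpler than the architecture of PaLM or GPT-3. The helper `count_dense_parameters` and the toy layer sizes are hypothetical.

```python
def count_dense_parameters(layer_sizes):
    """Count weights and biases in a stack of fully connected layers."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        # One weight per input-output pair, plus one bias per output unit.
        total += n_in * n_out + n_out
    return total


# Toy network: 4 inputs, 8 hidden units, 2 outputs.
toy_total = count_dense_parameters([4, 8, 2])
print(toy_total)  # 58

# Ratio between the two parameter counts quoted in the article.
ratio = 540e9 / 175e9
print(round(ratio, 2))  # 3.09
```

By these figures, PaLM has roughly three times as many parameters as GPT-3.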

The growing number of parameters has allowed researchers to obtain a wide range of high-quality results without having to spend time training the model for individual scenarios. In other words, the performance of a language model is often measured by the number of parameters it supports, and the largest models are capable of what is known as “few-shot learning”: the ability of a system to learn a variety of complex tasks from relatively few training examples.
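In practice, few-shot learning is often done by placing a handful of solved examples in the prompt itself rather than retraining the model. The sketch below shows that idea under this assumption, reusing the zebra joke from this article as the single solved example. The function `build_few_shot_prompt` and the second joke are hypothetical illustrations, not part of Google's paper.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], new_joke: str) -> str:
    """Lay out solved (joke, explanation) pairs followed by an unsolved joke."""
    blocks = [
        f"Joke: {joke}\nExplanation: {explanation}"
        for joke, explanation in examples
    ]
    # The final block leaves the explanation empty for the model to fill in.
    blocks.append(f"Joke: {new_joke}\nExplanation:")
    return "\n\n".join(blocks)


solved_examples = [
    (
        "What is the difference between a zebra and an umbrella? One is a "
        "striped animal related to horses, and the other is a device you use "
        "to stop the rain from falling on you.",
        "This joke is an anti-joke. The answer is obvious, while you were "
        "expecting a funny answer.",
    ),
]

# Illustrative new joke, chosen for this sketch only.
few_shot_prompt = build_few_shot_prompt(
    solved_examples,
    "Why did the scarecrow win an award? Because he was outstanding in his field.",
)
print(few_shot_prompt)
```

Adding more solved pairs to `solved_examples` gives the model more demonstrations to imitate, without changing any of its parameters.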
